Cleaning data in SQL, Python, PowerQuery

In this post, I am going to look at a ‘dirty’ dataset and perform standard data cleaning principles to arrive at the same clean dataset using different tools: A Fabric Notebook, SQL and PowerQuery. The types of transformations I will be doing include schema adjustments, handling special characters, null values, duplicate values and data types.

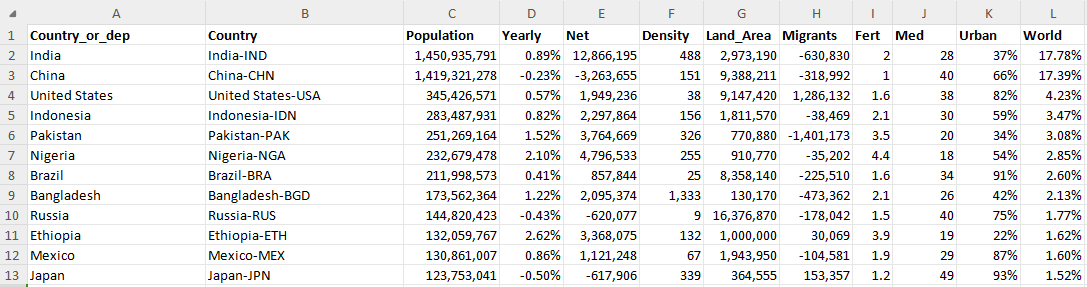

The data I am using for this is a slightly modified version of the population data from worldometers which you can find here. For illustration purposes, I’ve added a column which concatenates 3 letter ISO codes to the Country name (for splitting later) and duplicated a number of rows.

An example of the data below

1. Python

I’ve started by loading the CSV file into a DataFrame, followed by checks of the original row count, identified and showed duplicate rows, and subsequently dropped these duplicates to update and display the new row count. This is purely diagnostic and be safely ignored. Then I’ve proceeded to drop the ‘Country_or_dep’ column and split the ‘Country’ column into ‘Country’ and ‘ISO Code’ using the ‘-’ delimiter. The code then converts relevant string columns to their appropriate numerical types (integers or floats) and ensures that any null values in these numerical columns are replaced with 0.

from pyspark.sql.functions import col, regexp_replace, split

# Load the DataFrame

df = spark.read.csv("Files/country_data.csv", header="True")

# 1. Original row count

original_row_count = df.count()

print(f'Original row count: {original_row_count}')

# 2. Check for duplicates and show them

duplicate_rows = df.exceptAll(df.dropDuplicates())

print('Duplicate rows:')

duplicate_rows.show()

# 3. Drop duplicates and get the new row count

df = df.dropDuplicates()

new_row_count = df.count()

print(f'Row count after dropping duplicates: {new_row_count}')

df = df.drop('Country_or_dep')

# Split the 'Country' column into 'Country' and 'ISO Code'

df = df.withColumn('Country_ISO', split(col('Country'), '-'))

df = df.withColumn('Country', col('Country_ISO').getItem(0)) \

.withColumn('ISO Code', col('Country_ISO').getItem(1)) \

.drop('Country_ISO')

# Change Data Types

df = df.withColumn('Population', regexp_replace(col('Population'), ',', '').cast('float'))

df = df.withColumn('Yearly', regexp_replace(col('Yearly'), '%', '').cast('float'))

df = df.withColumn('Net', regexp_replace(col('Net'), ',', '').cast('float'))

df = df.withColumn('Density', col('Density').cast('float'))

df = df.withColumn('Land_Area', regexp_replace(col('Land_Area'), ',', '').cast('float'))

df = df.withColumn('Migrants', regexp_replace(col('Migrants'), ',', '').cast('float'))

df = df.withColumn('Urban', regexp_replace(col('Urban'), '%', '').cast('float')) \

.withColumn('World', regexp_replace(col('World'), '%', '').cast('float'))

# Replace null values with 0 in all numerical columns

df = df.fillna(0, subset=['Population', 'Yearly', 'Net', 'Density', 'Land_Area', 'Migrants', 'Urban', 'World'])

# Show the transformed DataFrame

df.show()

Output:

Original row count: 191

Duplicate rows:

+--------------+--------------+----------+------+---------+-------+---------+--------+----+---+-----+-----+

|Country_or_dep| Country|Population|Yearly| Net|Density|Land_Area|Migrants|Fert|Med|Urban|World|

+--------------+--------------+----------+------+---------+-------+---------+--------+----+---+-----+-----+

| Yemen| Yemen-YEM|40,583,164| 3.03%|1,192,365| 77| 527,970| -10,482| 4.5| 18| 33%|0.50%|

| Italy| Italy-ITA|59,342,867|-0.26%| -156,586| 202| 294,140| 95,246| 1.2| 48| 72%|0.73%|

| Madagascar|Madagascar-MDG|31,964,956| 2.47%| 769,024| 55| 581,795| -1,795| 3.9| 19| 40%|0.39%|

+--------------+--------------+----------+------+---------+-------+---------+--------+----+---+-----+-----+

Row count after dropping duplicates: 188

+----------------+----------+------+------+-------+---------+--------+----+---+-----+-----+--------+

| Country|Population|Yearly| Net|Density|Land_Area|Migrants|Fert|Med|Urban|World|ISO Code|

+----------------+----------+------+------+-------+---------+--------+----+---+-----+-----+--------+

| Philippines| 115843670| 0.83|952471| 389| 298170.0| -160373| 1.9| 26| 49.0| 1.42| PHL|

| Rwanda| 14256567| 2.16|302096| 578| 24670.0| -15582| 3.6| 20| 18.0| 0.17| RWA|

| Hong Kong| 7414909| -0.37|-27825| 0| 1050.0| -19272| 0.7| 47| 0.0| 0.09| HKG|

| Singapore| 5832387| 0.75| 43297| 0| 700.0| 20011| 1| 36| 0.0| 0.07| SGP|

| Cameroon| 29123744| 2.65|751057| 62| 472710.0| -13892| 4.3| 18| 59.0| 0.36| CMR|

| Portugal| 10425292| -0.05| -5446| 114| 91590.0| 20648| 1.5| 47| 66.0| 0.13| PRT|

| Guyana| 831087| 0.57| 4734| 4| 196850.0| -5407| 2.4| 26| 27.0| 0.01| GUY|

| Malta| 539607| 1.25| 6651| 0| 320.0| 6323| 1.1| 41| 77.0| 0.01| MLT|

| Switzerland| 8921981| 0.58| 51420| 226| 39516.0| 40099| 1.4| 43| 74.0| 0.11| CHE|

| Mauritius| 1271169| -0.19| -2419| 626| 2030.0| -2787| 1.2| 37| 41.0| 0.02| MUS|

| Liberia| 5612817| 2.18|119786| 58| 96320.0| -7779| 3.9| 19| 54.0| 0.07| LBR|

| Gibraltar| 39329| 2.23| 858| 0| 10.0| 598| 1.9| 39| 90.0| 0.0| GIB|

| Burkina Faso| 23548781| 2.27|523005| 86| 273600.0| -25807| 4.1| 17| 33.0| 0.29| BFA|

|French Polynesia| 281807| 0.25| 689| 77| 3660.0| -1261| 1.5| 35| 66.0| 0.0| PYF|

| Kuwait| 4934507| 1.98| 95725| 277| 17820.0| 61624| 1.5| 35| 92.0| 0.06| KWT|

| Suriname| 634431| 0.88| 5545| 4| 156000.0| -1166| 2.2| 28| 62.0| 0.01| SUR|

| Anguilla| 14598| 1.3| 188| 162| 90.0| 109| 1.4| 38| 0.0| 0.0| AIA|

| Botswana| 2521139| 1.65| 40895| 4| 566730.0| -5778| 2.7| 23| 75.0| 0.03| BWA|

| Mali| 24478595| 2.98|709468| 20|1220190.0| -46880| 5.5| 16| 44.0| 0.3| MLI|

| Guadeloupe| 375106| -0.37| -1411| 222| 1690.0| -1765| 2.1| 47| 0.0| 0.0| GLP|

+----------------+----------+------+------+-------+---------+--------+----+---+-----+-----+--------+

2. SQL

This was a little trickier in handling the non-numerical characters in the fields before converting data types: Eventually, I stuck with the TRY_CAST function and ended with the same rowcount and value matches as the Python cleanup above.

-- Drop duplicates

WITH Deduped AS (

SELECT DISTINCT *

FROM [WorldData].[dbo].[country_data]

),

-- Split 'Country' into 'Country' and 'ISO Code'

SplitCountry AS (

SELECT

SUBSTRING(Country, 1, CHARINDEX('-', Country) - 1) AS Country,

SUBSTRING(Country, CHARINDEX('-', Country) + 1, LEN(Country)) AS [ISO Code],

Population,

Yearly,

Net,

Density,

Land_Area,

Migrants,

Urban,

World

FROM Deduped

),

-- Convert data types to FLOAT, handle "N.A" in Urban, and remove commas from Density

Transformed AS (

SELECT

Country,

[ISO Code],

TRY_CAST(REPLACE(Population, ',', '') AS FLOAT) AS Population,

TRY_CAST(REPLACE(Yearly, '%', '') AS FLOAT) AS Yearly,

TRY_CAST(REPLACE(Net, ',', '') AS FLOAT) AS Net,

TRY_CAST(REPLACE(Density, ',', '') AS FLOAT) AS Density, -- Remove commas from Density

TRY_CAST(REPLACE(Land_Area, ',', '') AS FLOAT) AS Land_Area,

TRY_CAST(REPLACE(Migrants, ',', '') AS FLOAT) AS Migrants,

TRY_CAST(CASE WHEN Urban = 'N.A' THEN NULL ELSE REPLACE(Urban, '%', '') END AS FLOAT) AS Urban, -- Handle "N.A" in Urban

TRY_CAST(REPLACE(World, '%', '') AS FLOAT) AS World

FROM SplitCountry

)

-- Replace null values with 0

SELECT

Country,

[ISO Code],

ISNULL(Population, 0) AS Population,

ISNULL(Yearly, 0) AS Yearly,

ISNULL(Net, 0) AS Net,

ISNULL(Density, 0) AS Density,

ISNULL(Land_Area, 0) AS Land_Area,

ISNULL(Migrants, 0) AS Migrants,

ISNULL(Urban, 0) AS Urban,

ISNULL(World, 0) AS World

FROM Transformed;

3. Power Query (M)

In Power Query, this was – of course – much simpler, as all of the transformations could be done no-code through the GUI & menus. I did this twice – once through GUI and another time just as a way to practice my M (with a little help from the documentation for the split snytax and data types)

let

// Load and promote headers

Source = Csv.Document(File.Contents("C:\Users\Jay\Admin\projects\country_data.csv"), [Delimiter=",", Columns=12, Encoding=1252,

QuoteStyle=QuoteStyle.None]),

PromotedHeaders = Table.PromoteHeaders(Source, [PromoteAllScalars=true]),

// Remove duped country column

RemovedCountryDepColumn = Table.RemoveColumns(PromotedHeaders, {"Country_or_dep"}),

// Split the 'Country' column into 'Country' and 'ISO Code'

SplitCountryColumn = Table.SplitColumn(RemovedCountryDepColumn, "Country",

Splitter.SplitTextByDelimiter("-", QuoteStyle.Csv), {"Country", "ISO Code", "Extra"}),

// Remove extra column from spliting

RemovedExtraColumn = Table.RemoveColumns(SplitCountryColumn, {"Extra"}),

// Replace 'N.A' with 0 in 'Urban'

CleanedColumns = Table.ReplaceValue(RemovedExtraColumn, "N.A", "0", Replacer.ReplaceText, {"Urban"}),

// Change data types

ChangedColumnTypes = Table.TransformColumnTypes(CleanedColumns, {

{"Country", type text},

{"ISO Code", type text},

{"Population", Int64.Type},

{"Yearly", Percentage.Type},

{"Net", Int64.Type},

{"Density", Int64.Type},

{"Land_Area", Int64.Type},

{"Migrants", Int64.Type},

{"Fert", type number},

{"Med", Int64.Type},

{"Urban", type number},

{"World", Percentage.Type}

}),

// Remove duplicate rows

RemovedDuplicates = Table.Distinct(ChangedColumnTypes)

in

RemovedDuplicatesThe key takeaway, is that the tools and languages we use are just that – tools. If you are able to do this in one of them, then you know conceptually how to complete the task even if you do not remember the syntax. While I use SQL, Python & Power BI (DAX/M) daily, I still favour certain tools for my data cleaning (as far upstream as possible and as far downstream as necessary). It is a fun challenge, sometimes, to try to replicate the same processes across different tools. It’s a great way to solidify conceptual understanding and how to transfer that cross-platform.

Leave a Reply